The ongoing push to digitize the petrochemical industry meets reluctance from plant operators, and for some valid reasons. While certain digitalization elements deserve praise in a generic context, applicability constraints apply in specific areas. One such challenging application is the Asset Integrity market, i.e. the engineering problems and services related to operational degradation of equipment and the control of its failure risk. We first glance at the eight categories of novel offerings, as shown in the picture, in this very context. Then we outline one adequate solution for upgrading failure risk control towards better confidence, sustainability and safety. This is achievable right now, with minimal change required.

Disclaimer: This article is not a comprehensive text on the above digitalization pillars. It outlines high-level critical thinking about the constraints of implementing digitalization elements in corrosion and cracking risk management at petrochemical plants. It is a discussion, not a prescription or professional advice. A wealth of extra information about AI and related topics, including videos, is here: https://primo.ai/

Will your plant get leaner or more agile upon digitizing it?

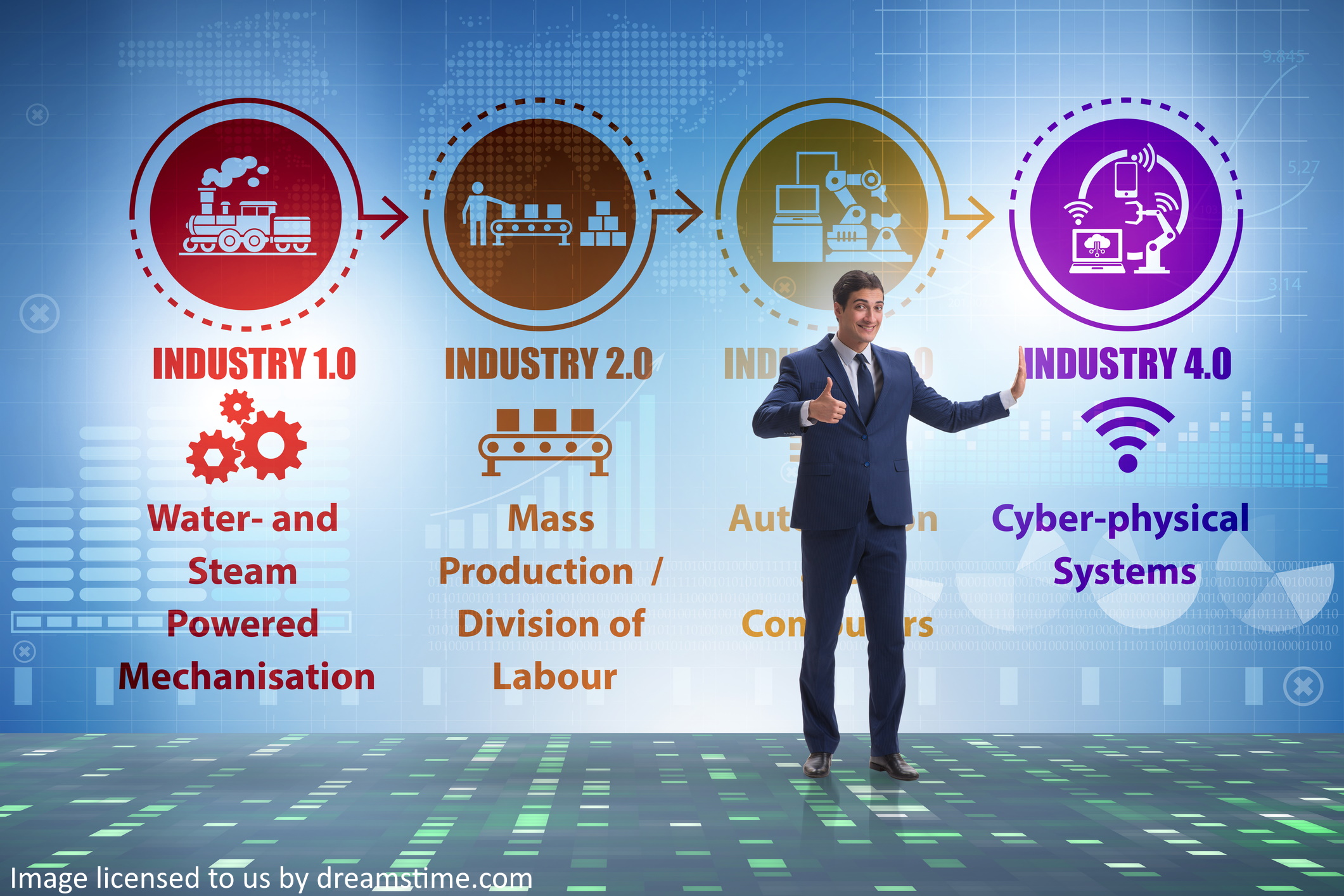

The sibling concept of Industry 4.0, which employs all the above technologies, applies well to manufacturing processes themselves. The goal of this promoted change is to establish an agile plant. An agile plant can quickly adapt to external changes through flexible production and IT-assisted interaction between staff, consumers and equipment. An example could be on-demand production with a maximum degree of automation, using ‘smart’ equipment and only a few highly skilled workers.

However, production isn’t the only business process across industries. Enterprise sustainability in many sectors (energy, civil, transportation and more) also relies on Asset Management and Risk Management frameworks. Efficient management of plant failure risk is crucial for meeting commercial obligations of continuous supply (in the oil and gas sector) and for the safety of personnel and the environment (at hazardous plants). Thus, the quality of asset risk management affects the sustainability of such a business.

For this very reason, equipment integrity and reliability management systems, tools and activities consume a substantial part of a petrochemical plant’s operational expenses. The challenges of digitizing them become visible when answering three questions:

- Will a technological novelty actually improve the risk control?

- Will that be a regulatory compliant solution?

- Will that solution remain effective and sustainable in the future?

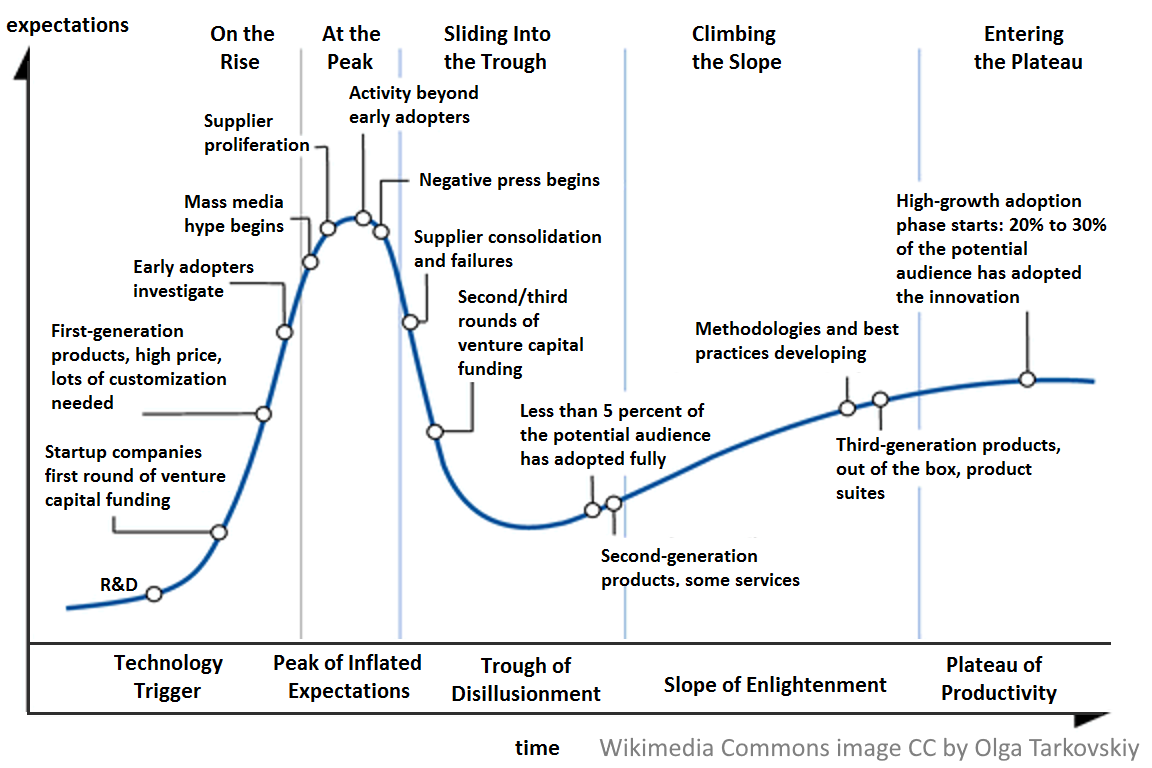

On the last question: the uptake of breakthrough technologies typically first rises to a peak and then relaxes to a lower average level. This is shown by the Hype Cycle schematic from Wikipedia.

Regarding the digitalization trend, it looks like we are approaching the initial adoption peak from the left right now. What comes next might be a big disillusionment. Thus, today’s digitalization investments will add extra technical complexity to the plant, with a real prospect of being replaced by more mature solutions in the near future. This situation contradicts the Lean business motive (i.e. minimizing waste of resources and optimizing processes).

Now, let’s examine today’s digi-tools in more detail, in the failure risk management context:

1. Virtual Reality, VR: Digital Twins

Virtual reality renders visual scenes that differ from what we see and from what actually exists in our surroundings. Rendering can be done using ordinary computer displays, VR glasses and projectors. Digital twins are intangible virtual reality products: digital clones of real objects made of program code and data.

In the petrochemical industry, services are offered for 3D laser scanning of industrial plants, generation of drawings and models, and computer display visualizations. They facilitate workers’ training and reduce the number of site visits for added safety. Even more astonishingly, physical data can also be superimposed on these 3D models, such as:

- Equipment identification and maintenance status

- Process parameters visualization

- FEA and corrosion data of individual equipment (e.g. pressure vessels and piping circuits)

The latter option, however, is compromised by its low potential for practical use. This is because integrity risk analysis traditionally uses a single ‘worst case’ data point, so the wealth of integrity information available (and visualized in this way) remains unused in risk control. We can create a pressure vessel twin that visualizes its internal corrosion map. But sadly, conventional Risk Based Inspection (RBI) planning will use only one (the worst) corrosion location from this map. So, why do we need that map and that twin? This is the first shortcoming (which we will resolve at the end of this post), further augmented by the second one:

- High time, cost and computational power requirements

In the context of corrosion and cracking risk management, digital twins of plant equipment currently appear to be luxurious extras. They are exposed to future technology evolution and require the Cloud platforms we discuss below.

2. Augmented Reality, AR: Wearable Devices

In contrast to 3D virtual reality, augmented reality solutions superimpose digital images on the surroundings we actually sense with our eyes and ears. AR uses wearable devices, including tracking and position sensors, to match the digital image to our actual coordinates and line of sight. To visualize AR information, we can use smart glasses or, even simpler, the cameras and screens of mobile phones and tablets (remember Pokémon Go?). The added features can be:

- The above bullet points of equipment data visualization, plus:

- Workers’ health and environmental condition monitoring (heartbeat, gas detection, IR data)

- Real-time assistance, training and advice from the office to field workers.

This spectrum of AR features resembles its military applications. An overview of those, with pictures and videos, is at this link: https://jasoren.com/augmented-reality-military/

And a concise overview of augmented reality applications in the oil and gas industry is here: https://aircada.com/augmented-reality-oil-gas/

Indeed, AR wearable technologies appear practical and ready to use in the asset integrity context. In particular, tag and testing point identification can be very useful for executing integrity inspections. These features, however, also tie us into IoT networks and Cloud computing.

3. Remotely Operated Vehicles, ROV: Drones, Crawlers, Submerged Robots

One highlight of remotely driven devices is that a connection to a Cloud becomes optional. We can store the acquired data locally and then transfer it to other devices on demand. ROVs were used in integrity inspections before the advent of digitalization, so they aren’t really novel.

Let’s consider a few example applications of ROVs:

- Drone surveys are helpful for checking the condition of electricity poles. If supplemented by image recognition (using AI), such a venture contributes very well to the integrity management of electric distribution networks. Automated visual detection of anomalies by AI further simplifies this task. It’s a great example. However, applied to industrial plant integrity, drones won’t replace operational surveillance (daily walk-arounds). This is because humans possess more than vision: they can think and can take physical action immediately, unlike drones. Regulatory constraints also apply here.

Another feature of drones, their ability to fly, is useful for tall and hard-to-access objects. An example is the inspection of tall tanks and column vessels for corrosion using EMAT thickness sensors (which, unlike conventional UT transducers, don’t require thorough surface preparation). There are already quite a few service providers on the NDT market, and eliminating rope access is obviously beneficial for safety. Nevertheless, drone applications are quite narrow, as the majority of oil and gas plant equipment can be inspected from ground level. We are not yet ready, nor willing, to host a garage of robo-dogs, or are we? And again, will that investment be justified?

- Submersible inspection robots are used for in-service inspections of tank and vessel floors without draining and cleaning. That eliminates shutdowns and the associated production loss. But again, the majority of plant equipment doesn’t require such a degree of technological sophistication.

Considering the above options, we also need to distinguish three types of integrity condition examination and make sure that our level of attention to detail is compliant and adequate to the risk:

- Surveillance activities involve observations. They don’t provide any numeric outputs. This is the level of near-daily operational housekeeping activities, which are mandatory at hazardous plants. Workers always offer more capabilities than modern robots, unless the equipment is hard to access.

- Monitoring implies a continuous activity. CCTV cameras, leak detectors and process probes fall into this category. Note that ROVs can only be applied periodically to be cost effective.

- Inspection is a periodic activity providing numeric (quantitative) outputs, such as corrosion depth or crack size. Numeric analysis of this data enables predictive risk control of potential integrity failures via Integrity Management (such as RBI). RBI is a mandatory element of the plant Risk Management process, which is an integral part of the Asset Management framework. ROVs are valuable for data collection in specific cases, like those outlined above. In-service inspections by certified personnel are mandated in hazardous industries and apply to the vast majority of plant equipment.

One topic to highlight here is the problem of integrity data analysis: whatever the particular means of collecting the data, the funnel of outdated integrity analysis still diminishes the value obtained from integrity evaluations. Once again, we will outline our solution at the very end.

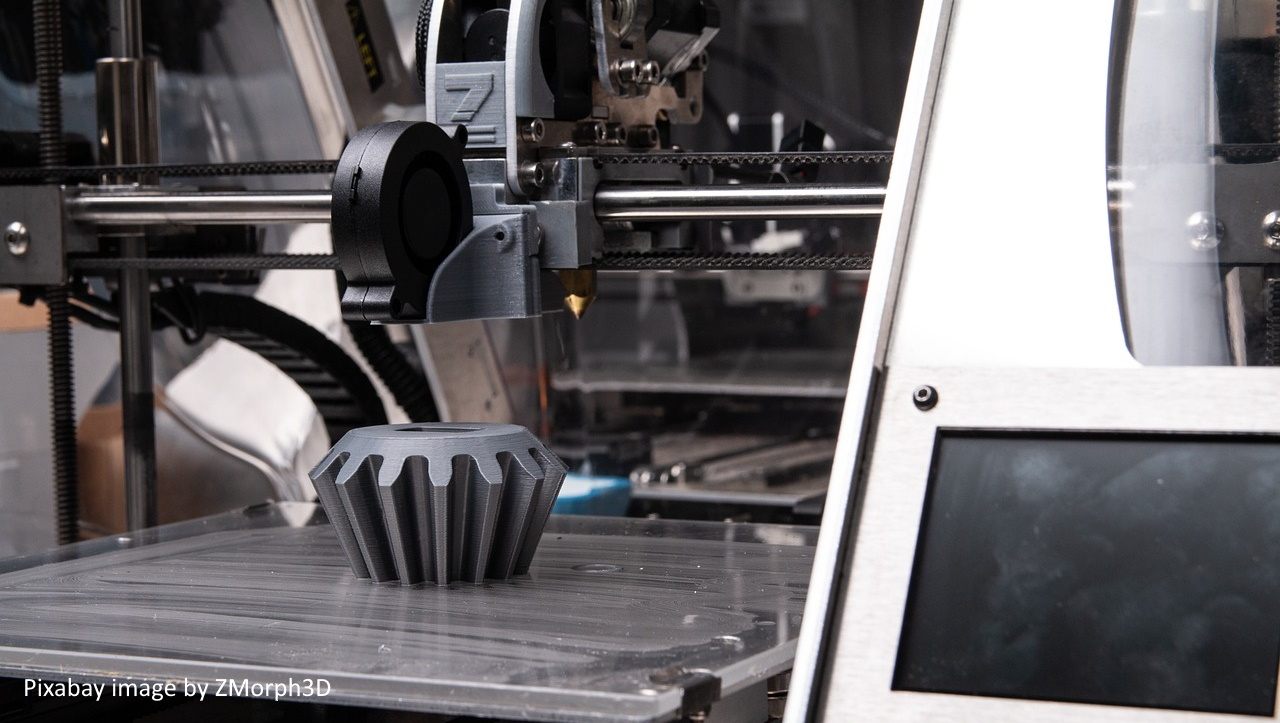

4. Additive Manufacture, AM: 3D printing

From everyday experience, we associate 3D printing with manufacturing parts from polymers (HDPE). On the industrial scale, however, there are technologies for fusing metallic powders, covering practically all engineering alloys used in the petrochemical industry.

One feature of these methods is the ability to create complex parts with no need for bolting and welding. The main compliance challenges for such parts are varying quality levels and the risk of internal porosity. A wealth of relevant information is at this link: https://www.metal-am.com/

There are market offers for printing large metal parts on demand. They are rational for retrofitting unique or obsolete mechanical components, i.e. they apply in specific situations. This option is good to know; however, printing metal parts on-site is neither economical nor really needed today.

5. Cloud Computing and Data Storage

Cloud computing offers the high computational power desirable for Big Data analysis and AI algorithms. A concise list of Cloud platforms is here: https://www.devopsschool.com/blog/list-of-cloud-computing-platforms/ Needless to say, these services cost money. Unless we perform big data analysis and artificial intelligence processing, we won’t really need the computational power of those clouds for integrity.

Another feature of cloud infrastructure is the ability to store very large amounts of data. But where can the big data come from? From rather excessive data acquisition (in the context of corrosion and cracking), by continuously interrogating perhaps too many sensors.

Now, let’s see the three catches of Cloud storage:

- Digitizing your plant using VR, AR, ML, AI ties you into the (paid) cloud services automatically.

- Your data hosted on cloud platforms isn’t in your full control anymore. Cyber risks on a global scale are likely yet to come.

- The new market of cyber insurance is emerging, now even down to retail businesses. What an excellent source of new revenues for the insurance sector! And who will be paying for it?

To wrap this item up, cloud storage options jeopardize business data security, so there should be a firm motive for using them. Also recall the Hype Cycle curve at this point.

By the way, we have developed an online but not-a-cloud integrity data management system running on ‘old school’ SQL databases. It is good enough for the inspection data of large plants.

6. Big Data Analysis (Quantitative options)

First, we need to outline what that analysis is in essence. If we consider numeric analysis (to obtain quantitative predictive outputs), extra value is extracted from big data using two general statistical approaches:

- Regression, which is effectively fitting a function of the independent variables to predict a target value. The least squares method of function fitting is a basic example. More complex methods exist for multi-variable (multivariate) problems.

- Correlation, which identifies the independent variables that influence the target function most.

Both approaches become more complicated as the number of variables grows, and a need for dimensionality reduction arises. Matrix algebra transformations then reduce the data to a few combinations of the independent variables that capture most of its variance (this is called Principal Component Analysis, PCA).
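To make the two approaches concrete, here is a minimal Python sketch rather than a production tool. The temperature, flow and corrosion rate numbers are invented for illustration, and the helper names `least_squares_line` and `pearson_r` are ours. The sketch fits a straight line by least squares and then compares how strongly each candidate variable correlates with the target.

```python
from math import sqrt


def mean(values):
    return sum(values) / len(values)


def least_squares_line(x, y):
    """Fit y ~ slope * x + intercept by ordinary least squares."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long series."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sqrt(sxx * syy)


# Hypothetical data, invented for this illustration only.
temperature = [40.0, 50.0, 60.0, 70.0, 80.0]  # deg C
flow = [3.0, 1.0, 4.0, 1.0, 5.0]              # arbitrary units
rate = [0.10, 0.15, 0.20, 0.25, 0.30]         # corrosion rate, mm/year

# Regression: predict the target from one independent variable.
slope, intercept = least_squares_line(temperature, rate)

# Correlation: rank the candidate variables by their link to the target.
r_temperature = pearson_r(temperature, rate)
r_flow = pearson_r(flow, rate)

print(f"rate ~ {slope:.4f} * T + {intercept:.4f}")
print(f"r(temperature) = {r_temperature:.2f}, r(flow) = {r_flow:.2f}")
```

In this made-up data set the rate is exactly linear in temperature, so temperature correlates far more strongly with it than flow does. Real plant data is never this tidy, and neither number says anything about the underlying physics.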

For the mathematically savvy, the following link provides a wealth of details to browse in its left pane: https://www.javatpoint.com/machine-learning We’re not going into mathematical details here, to keep the momentum.

Interestingly, we can run into the trap of correlating moonlight intensity with the growth of a light pole. This emphasizes that big data tools are relevant where no 1st principle models apply. This happens in stochastic systems, or where just too many factors influence the target function.

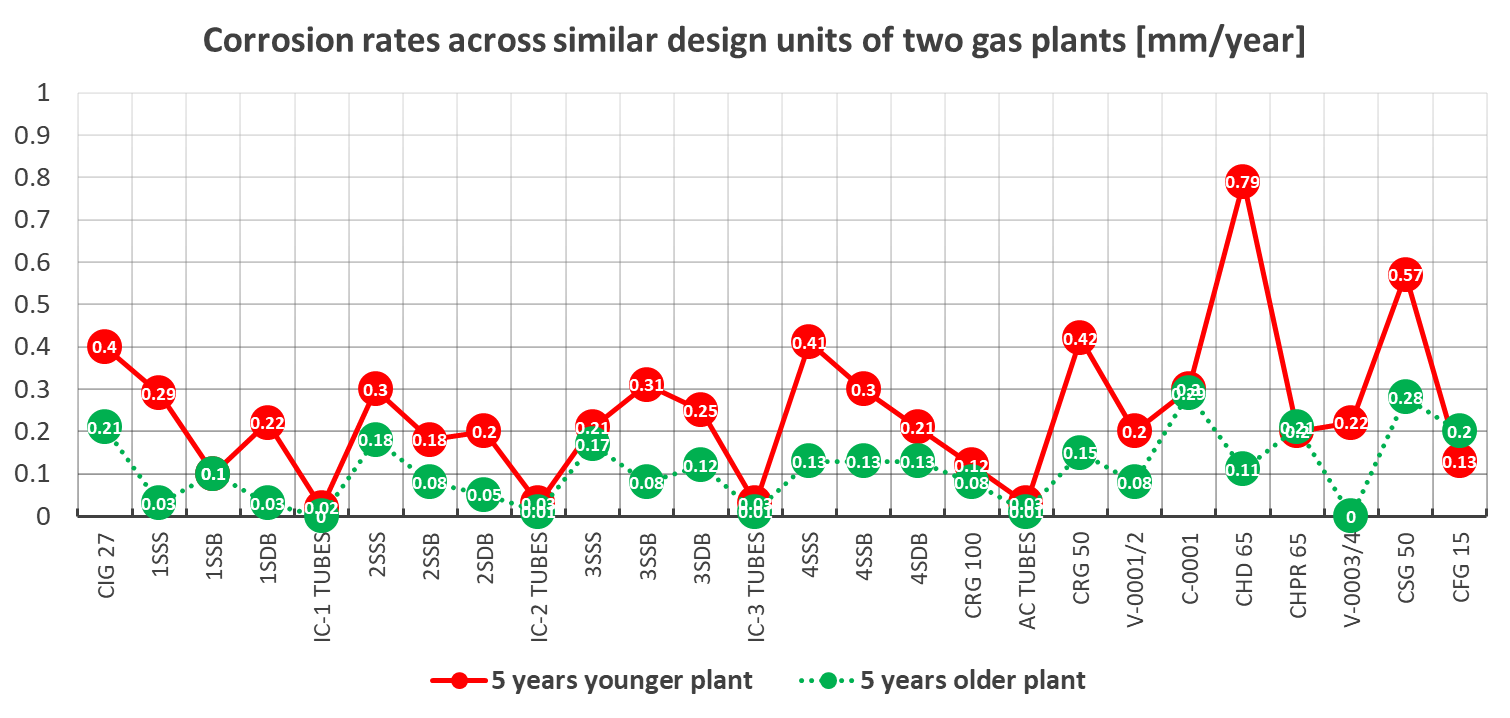

If we have physical models, we don’t need such an abstraction. Otherwise we miss the point of the problem’s actual physics. Physical laws are guaranteed to work, but regression and correlation aren’t. Examples are plant reliability and integrity challenges. The former has had a well-established theory for a century, and the latter can use actual damage measurements rather than constructing predictions from process parameters. To explain, the next figure shows maximum corrosion rates in two gas plants of similar design located near each other:

Isn’t it amazing that the younger plant (red) showed consistently higher corrosion rates across the units? And should we resort to multi-variable data acquisition and correlation to predict this?

The answer is No. Firstly, because a model carefully trained and validated today may not work tomorrow (as the feedstock composition changes). Secondly, because actual (evidential) corrosion data is available from the mandatory integrity inspection activities, and it serves as the ultimately true record of the individual integrity condition and damage rate. There is no need to simulate damage that has been measured. Permanent corrosion gauges will do this job, for example.
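As a reminder of how little modelling a measured damage rate needs, here is the usual textbook arithmetic in a short Python sketch. The thickness values, the interval and the minimum required thickness are hypothetical, and the function names are ours.

```python
def corrosion_rate(t_previous_mm, t_current_mm, years_between):
    """Measured wall loss per year between two thickness readings."""
    if years_between <= 0:
        raise ValueError("years_between must be positive")
    return (t_previous_mm - t_current_mm) / years_between


def remaining_life(t_current_mm, t_minimum_mm, rate_mm_per_year):
    """Years until the wall reaches the minimum required thickness."""
    if rate_mm_per_year <= 0:
        return float("inf")  # no measurable wall loss
    return (t_current_mm - t_minimum_mm) / rate_mm_per_year


# Hypothetical readings at one test point, four years apart.
rate = corrosion_rate(t_previous_mm=12.0, t_current_mm=11.0, years_between=4.0)
life = remaining_life(t_current_mm=11.0, t_minimum_mm=8.0, rate_mm_per_year=rate)

print(f"measured rate: {rate} mm/year, remaining life: {life} years")
```

No process parameters enter this calculation: the two readings already contain the combined effect of whatever happened inside the equipment between the inspections.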

Another dangerous misunderstanding involving ‘big data’ is the attempt to use industry-wide statistics for individual objects of the same type. This idea works in reliability theory, which deals with batch-produced identical parts operating in similar (most often lubricated) environments. But this approach is fundamentally wrong for corrosion and cracking of static equipment, because individual equipment units experience individual damage rates (visible in the above graph). There is little chance of matching the failure probabilities of individual items by applying factors to generic failure data (as is done in API-RP-581). Literally, there is zero chance, as probabilities don’t tolerate factors. We will see an alternative, robust solution to such problems at the very end of this article.

In summary, correlation and regression of big data turn an integrity problem into a black box. And there is no guarantee that such a model will remain valid, as we abandon the 1st principles in this way.

7. Internet of Things (IoT)

The Internet of Things concept relates to big data acquisition, storage and analysis. It isn’t just Internet communication, but ranges from short-range wireless connections to satellite data transfer. The particular network technologies vary, each addressing a certain communication range, including: RFID, NFC, Bluetooth, ISA100, Z-Wave, HART, 6LoWPAN, Zigbee, IEEE 802.15. Some of these also offer a mesh network topology to enable multi-hop data transmission from a sensor through other sensors to a router. A good digest on Industrial IoT (IIoT) is here: https://www.techtarget.com/iotagenda/definition/Industrial-Internet-of-Things-IIoT

The goal is that the (big) data acquired by sensors is transmitted to a cloud and analyzed (sometimes using AI), and then a feedback signal is sent to certain process control actuators. Such automation is quite sophisticated, but works effectively for process optimization at chemical plants. In particular, such models can be built from unit operational tests across the whole spectrum of actuator settings (valve opening, media temperature, pressure etc.), followed by multi-variable optimization of the process unit operation. Particular IoT platforms can be Cloud-based, commercial off-the-shelf (COTS), or industry-specific ‘point solutions’.

But if we think about corrosion and cracking sensing, a very different context applies:

- Such readings need not be taken continuously, which helps to minimize the data waterfall

- There are no actuators to feed back, except for control room warnings or alarms

- There is no reliable use of big data predictive models, as we discussed just above

All this suggests that IoT/Cloud technologies can be ‘overkill’ for integrity inspection data. Nowadays, some providers of permanently mounted corrosion and cracking sensors offer a choice of connectivity: either wireless Cloud data acquisition or manual periodic interrogation using NFC techniques. The latter option appears more adequate in view of the above. Such solutions are indeed handy, but viable only for the most critical equipment. Manual UT gauging for internal corrosion seems set to remain the most cost-efficient option for years.

8. Artificial Intelligence (AI) and Machine Learning (ML)

We have already looked at the quantitative Machine Learning (ML) options (Regression and Correlation). Two other common applications of ML provide qualitative outputs: Classification (pass/fail) and Clustering (identifying different populations in data). The latter two algorithms have found successful use in marketing, social mechanics, image recognition and more.

ML is meant to deliver Artificial Intelligence (AI), and the keyword here is Artificial. All ML models need algorithm training using data samples (which often takes a long time). This is followed by validation of the mathematical model on a real data set. Notably, all such data analysis tools use mathematics, not physical laws.

One recent breakthrough assisting AI applications is the ongoing appearance of specialized Tensor and Neural Processing Units (TPU, NPU), whose hardware architecture is better suited to Cloud big data analysis than that of CPUs and GPUs. Another option is using reconfigurable Field Programmable Gate Arrays (FPGA) or custom Application Specific Integrated Circuits (ASIC) for autonomous data processing.

A compendium of modern chip architectures is here: https://primo.ai/index.php?title=Processing_Units_-_CPU,_GPU,_APU,_TPU,_VPU,_FPGA,_QPU

Artificial Neural Networks (ANN) are predictive regression models usually having multiple inputs and a single output. Training assigns synaptic weights to the input variables, which are then summed to produce an adequate output signal. The most advanced uses of ANNs in process control include Real Time Optimization (RTO) under varying process conditions and Model Predictive Control (MPC). The latter predicts future parameters after training on the sensors’ past data. Nevertheless, the following limitation applies:

- Factors beyond the training data set can’t be accommodated without forced re-training. To explain, model training in process automation uses all possible combinations of process settings to find an optimum, and this is why such numeric models are successful. In contrast, integrity damage models can’t be trained on the full spectrum of operating conditions because:

- The feedstock composition for training can’t be varied widely enough to reflect its future changes

- The damage takes a long time to progress, which implies practically infinite training spans
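The re-training limitation can be illustrated with a deliberately simple stand-in for a trained model. In the Python sketch below, the ‘true’ damage law (a quadratic in a contaminant concentration) is purely hypothetical, and a least squares straight line plays the role of the ANN. Neither comes from real plant data, and the function names are ours.

```python
def true_rate(concentration):
    """Hypothetical damage law, invented for this illustration only."""
    return 0.01 * concentration ** 2


def fit_line(x, y):
    """Ordinary least squares fit of y ~ slope * x + intercept."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


# "Training" only covers the concentrations seen so far (1 to 5 units).
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [true_rate(c) for c in train_x]
slope, intercept = fit_line(train_x, train_y)


def predict(concentration):
    return slope * concentration + intercept


# Compare the model with the hypothetical truth inside and outside that range.
error_inside = abs(predict(3.0) - true_rate(3.0))
error_outside = abs(predict(20.0) - true_rate(20.0))

print(f"error at 3 units (inside training range): {error_inside:.2f}")
print(f"error at 20 units (outside training range): {error_outside:.2f}")
```

The fitted model looks acceptable within the range it was trained on and goes badly wrong once the feedstock moves outside it. A more flexible model would fit the training range better, but it has no more information about the untested conditions than the straight line does.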

More importantly, the decisions of a trained AI are non-traceable and non-transparent. As a result, using AI is equivalent to passing responsibility to something uncontrollable, with no one to blame.

Getting back to the context of Asset Integrity, let’s imagine AI applications to control corrosion and cracking failure risks:

- It is impractical to change process parameters to alleviate latent damage (this would create a conflict between process and integrity optimization goals), and therefore no feedback to process controls applies.

- AI could contribute to Integrity Operating Windows (IOW) systems, which can generate alarms for human personnel to follow up. But what specific function would such an AI system perform then?

- As we have seen above, correlating and regressing a damage rate against process parameters is less reliable than measuring that damage directly. Hence, periodic inspections or, even better, permanent sensors will supply more reliable data to the risk management system than ANNs.

- Using industry-wide failure statistics for corrosion and cracking of individual equipment is not reliable either, as we also discussed above.

As such, no drivers for AI and its Cloud infrastructure appear in today’s corrosion and cracking risk management. Of course, this observation doesn’t apply to other kinds of business challenges, where digitalization can be really helpful.

Does this verdict mean we should leave conventional RBI systems as they are, i.e. keep collapsing the damage data into a single ‘worst case’ point and keep using relative inspection prioritization?

Certainly not! There is an opportunity to align state-of-the-art integrity data with the cost-benefit analysis aspirations of Asset Management, and truly optimize integrity budgets:

Our solution: Statistical analysis of Equipment individual integrity data

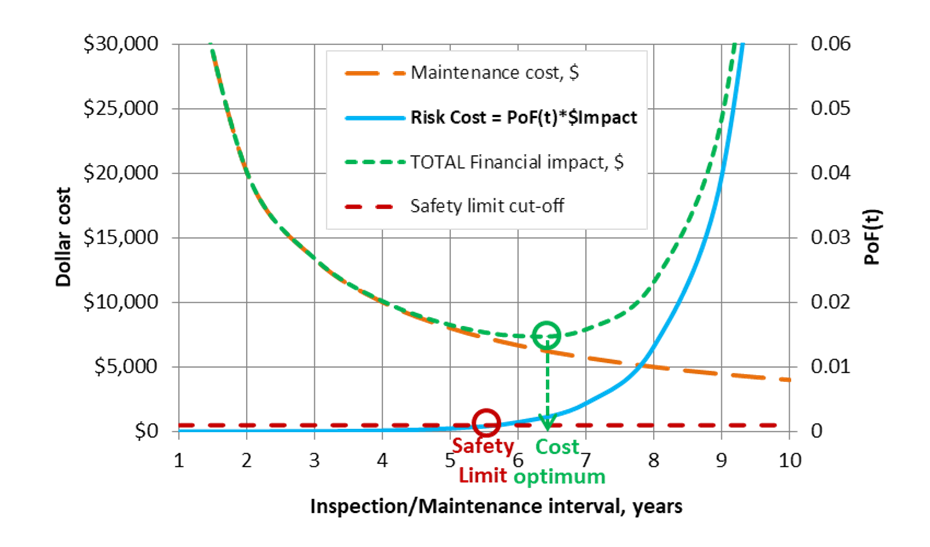

We have developed a 1st principle methodology for the long-awaited upgrade of Asset Integrity Management. Since it involves statistical analysis of corrosion and cracking data, it can also be viewed as a digitalization element that doesn’t require a Cloud connection. It works as follows:

- Cost-benefit analysis of risk control options (and inspection intervals) for individual items requires knowledge of how the monetary risk cost will evolve in the future

- It can be constructed from the equipment Probability of Failure predictions versus time, PoF(t), multiplied by the monetary failure consequence, together with a safety limit set on the PoF(t)

- The PoF(t) is found from a statistical distribution fitted to the damage data of that individual equipment (multi-point corrosion depth readings being standard practice)

- Note the difference: we have developed a scientific method to statistically analyze the integrity condition of that very individual equipment from its actual inspection data, as opposed to the generic (and misleading) approaches that use failures of allegedly ‘similar’ equipment.
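The bullets above can be illustrated with a toy Python sketch. It is not our actual methodology: it assumes, purely for illustration, a normal distribution fitted to the depth readings and depths that grow in proportion to service time. All numbers and the function names (`pof_at`, `first_year_over_limit`) are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def pof_at(depths_mm, age_years, t_years, allowance_mm):
    """Probability that corrosion depth exceeds the allowance at time t.

    Illustrative assumptions: depths are normally distributed and every
    depth scales linearly with service time.
    """
    scale = t_years / age_years
    dist = NormalDist(mean(depths_mm) * scale, stdev(depths_mm) * scale)
    return 1.0 - dist.cdf(allowance_mm)


def first_year_over_limit(depths_mm, age_years, allowance_mm, limit, horizon=50):
    """First whole year after the inspection in which PoF(t) exceeds the limit."""
    for year in range(int(age_years) + 1, horizon + 1):
        if pof_at(depths_mm, age_years, year, allowance_mm) > limit:
            return year
    return None  # limit not reached within the horizon


# Hypothetical multi-point depth readings from one inspection, in mm.
depths = [1.0, 1.2, 0.8, 1.5, 1.1, 0.9, 1.3, 1.0]
age = 10.0                 # years in service at the inspection
allowance = 3.0            # corrosion allowance, mm
consequence = 2_000_000.0  # monetary consequence of a failure
pof_limit = 1e-3           # safety limit on PoF(t)

for year in (10, 15, 20, 25, 30):
    pof = pof_at(depths, age, year, allowance)
    risk_cost = pof * consequence  # risk cost = PoF(t) x consequence
    print(f"year {year}: PoF = {pof:.2e}, risk cost = {risk_cost:,.0f}")

limit_year = first_year_over_limit(depths, age, allowance, pof_limit)
print(f"PoF safety limit is first exceeded in year {limit_year}")
```

The point of the sketch is the data flow rather than the particular distribution: all readings of that one item feed the PoF(t) curve, the curve multiplied by the consequence gives a risk cost that can be weighed against the cost of an inspection or repair, and the safety limit caps how long action can be deferred.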

To explain, if you visit a doctor, which service would satisfy you better? Googling the typical health problems of ‘similar’ patients and giving generic advice? Or assessing your individual health and developing a relevant treatment plan? Surely, we prefer the individual approach.

The same applies to individual equipment integrity, and we have the know-how to do that. The next chart shows an example of our corrosion risk analysis constructed from real-life data:

This is effectively a New RBI, which harmonizes the interaction of Asset Management and Advanced Condition Monitoring and also offers a financial justification for Digitalization tools.

Your Thoughts Please?

If you have any questions or comments, please feel free to leave them here or drop us a line.

Did I miss or misinterpret anything important for the Digitalization perspectives of static equipment?

Are you aware of success stories from digitizing Integrity Risk Management systems?

Would you argue for or against digitizing Integrity Engineering problems, and why?

I hope you enjoyed reading this and found something of interest. Stay tuned!